Voice

About 40 years ago, something new appeared in the telephony market: IVR (Interactive Voice Response). By pressing one or more of the 12 keys on a telephone, it was possible to answer a question asked "by the telephone". Think of "for the Purchasing department press 1, for the Sales department press 2". It became a great success, and hundreds of companies all over the world offered this kind of "automatic dialogue". Of course, the choice of input was limited: you could not offer more than about 6 options per question, and menus could go at most 3 or 4 layers deep. Moreover, these systems were not very flexible, and changing them took a lot of time (and money).

But still... it became a great success and it is still used in many services. In the early nineties, speech recognition became commercially available for English (American and British), followed a few years later by French, German and Dutch.

Of course, the first dialogues were actually a kind of IVR with speech, but as the technology matured, so did the spoken dialogues. And when "slot-filling" allowed you to fill several slots at once (e.g. "tomorrow morning", "from Utrecht", "to Enschede"), pioneering companies started to see the value of speech recognition and (slowly) switched from IVR to ASR (Automatic Speech Recognition).
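To make the idea of filling several slots at once concrete, here is a minimal sketch in Python. It is not how any particular product works: the slot names, the regular expressions and the helper functions are all assumptions made up for this illustration, and a real system would use a proper language-understanding component instead of keyword patterns.

```python
import re

# Hypothetical slot patterns for a travel-information dialogue (illustration only).
SLOT_PATTERNS = {
    "origin": re.compile(r"\bfrom (\w+)", re.IGNORECASE),
    "destination": re.compile(r"\bto (\w+)", re.IGNORECASE),
    "time": re.compile(
        r"\b((?:today|tomorrow)(?: (?:morning|afternoon|evening))?)\b",
        re.IGNORECASE,
    ),
}


def fill_slots(utterance, slots=None):
    """Fill every slot the utterance mentions; keep earlier values otherwise."""
    slots = dict(slots or {})
    for name, pattern in SLOT_PATTERNS.items():
        match = pattern.search(utterance)
        if match:
            slots[name] = match.group(1)
    return slots


def missing_slots(slots):
    """Slots the dialogue still has to ask for."""
    return [name for name in SLOT_PATTERNS if name not in slots]


# One utterance fills all three slots, so no follow-up question is needed.
slots = fill_slots("tomorrow morning from Utrecht to Enschede")
print(slots)
print(missing_slots(slots))
```

With an IVR-style dialogue, each of these three values would need its own question. Here the caller can give them in one sentence, and the system only asks for whatever `missing_slots` still reports.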

At the beginning of the 21st century, speech recognition became more and more accepted and ASR started to take over the dominant role of IVR in the world.

Over the years, speech recognition became not only better (fewer recognition errors) but also smarter (more words). Instead of relying on a "grammar" drawn up by the system developers, LVCSR (Large Vocabulary Continuous Speech Recognition) made it possible to "say what you want to say, we will recognise it". With LVCSR, people could "just" speak, and a smart NLP (Natural Language Processing) unit had to extract the meaning of the message from the recognised speech.

With AI becoming available for commercial use (around 2010), a lot changed! DNNs (Deep Neural Networks) for speech recognition (Microsoft, 2014) drastically decreased the WER (Word Error Rate) in the following 5 years. Today, for clearly spoken American English (and Chinese), the WER is at the level of humans. And yet... we humans almost always do better than machines in real situations. But why?
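For readers who have not met the WER before: it is commonly computed as the number of word substitutions, deletions and insertions needed to turn the recognised text into the reference transcript, divided by the number of words in the reference. The sketch below shows that calculation with a standard edit-distance table. The function name and the example sentences are made up for this illustration.

```python
def word_error_rate(reference, hypothesis):
    """WER = (substitutions + deletions + insertions) / words in the reference."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # prev[j] holds the edit distance between the reference words seen so far
    # and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr.append(min(
                prev[j] + 1,         # reference word missing in hypothesis (deletion)
                curr[j - 1] + 1,     # extra word in hypothesis (insertion)
                prev[j - 1] + cost,  # match or substitution
            ))
        prev = curr
    return prev[-1] / len(ref)


# One wrong word out of four gives a WER of 25%.
print(word_error_rate("press one for sales", "press two for sales"))
```

Note that the WER only counts word errors. It says nothing about whether the meaning of the message survived.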

Text

In the late '90s and early '00s, chat services emerged. People could exchange written messages with each other in more or less real time via Messenger or other services. At first, it was mainly used by children, but older people quickly saw how useful it was, and it gradually became very big. But... it was, certainly in the first years, mainly a way of human-human communication: you wrote your friend a message, she read it and sent a reply.

But soon the question of self-service came up: couldn't these written messages be (partly) automated? And so, little by little, automated text services became available. You could type a question, which was then answered (semi-)automatically. Here too, AI made its appearance. The dialogues became more and more "human", and sometimes it is difficult to tell whether a human or a machine is answering your questions.

Both spoken and written dialogues usually do not yet pass the Turing test, but they are improving and can already be used successfully.

Language and Speech

Initially, spoken and written dialogues were fairly far apart. But as technology improved, and especially after DNNs greatly improved both speech recognition and NLP, chat and voice bots started booming. You see them more and more at companies, government bodies and other organisations. And, sometimes surprisingly, they perform reasonably to very well.

The popularity makes you think about combining them. After all, you spend a lot of time setting up a good chatbot, so why not make it accessible for speech as well?

Until about 5 years ago, this was not recommended. The written chatbot and the spoken voicebot differed in design and also served a (slightly) different target group. The spoken version was more focused on the speaker: what could an organisation do for him or her, or what did he or she have to do to achieve something? The written form was clearly more distant and dealt more with information about the organisation: what it did and who it was. But the latter changed with the arrival of chatbots. The formal style was often replaced by an informal one, the tone of voice changed and the range of things you could handle became wider.

Chat and voice were clearly growing together, which made integration in the background an obvious step. And then things moved fast. Now, more and more companies are asking whether their existing chatbot(s) can also be used for voice. And usually the answer is: yes, if... Because, of course, there is still a difference between the two, although it is a lot smaller than it was 5 years ago.

Combining Voice and Chat

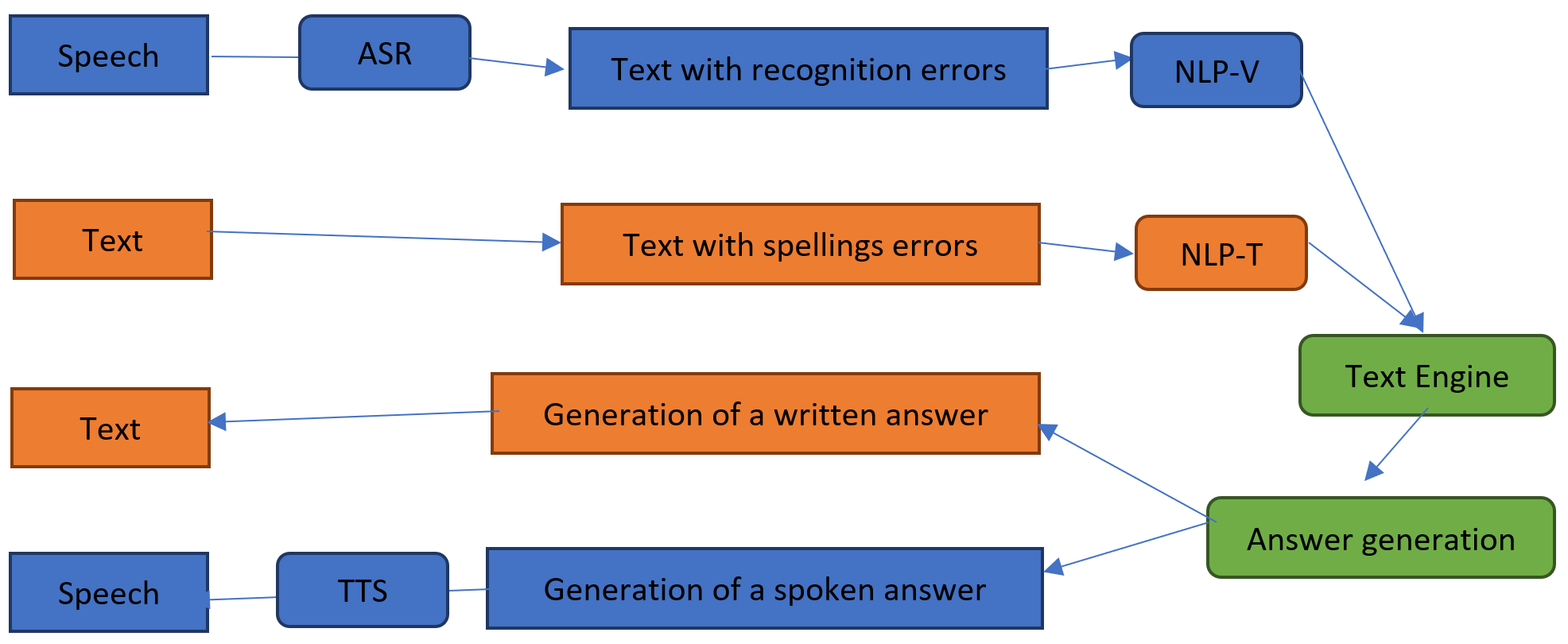

The difference between the two is clear: a voicebot needs ASR to go from speech to text and TTS (Text-to-Speech) to go from text back to speech. Both techniques are rapidly getting better, and with TTS in particular there is not much to worry about: the spoken output could perhaps be a little better, a little more natural and a little more personalised, but in general it is easy to understand.

With ASR and the subsequent NLP engine, this is clearly different. People generally do not speak in grammatically correct sentences: they stop halfway through a sentence, change the subject or assume that what they are saying can easily be understood. Modern ASR engines can convert the spoken words into written text, but the question is whether the NLP engine can make sense of it. Of course, the same applies to the NLP engine that has to make sense of a written message, but as long as writers stay reasonably close to writing down what they mean, this is usually doable.
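The shared structure can be sketched in a few lines of Python. This is only an illustration under stated assumptions: `understand`, `fake_asr` and `fake_tts` are placeholders invented for this example, where a real system would call an actual NLP, ASR and TTS engine. The point is that the chat and voice channels reuse the same text-in, text-out core, and that voice only adds a step before and after it.

```python
from typing import Callable


def understand(text: str) -> str:
    """Shared dialogue core (placeholder): text in, text out."""
    if "opening hours" in text.lower():
        return "We are open from 9 to 5."
    return "Sorry, I did not understand that."


def chat_turn(message: str) -> str:
    """Chat channel: the written message goes straight to the shared core."""
    return understand(message)


def voice_turn(
    audio: bytes,
    asr: Callable[[bytes], str],
    tts: Callable[[str], bytes],
) -> bytes:
    """Voice channel: ASR in front of the shared core, TTS behind it."""
    text = asr(audio)          # speech -> text
    reply = understand(text)   # same core as the chatbot
    return tts(reply)          # text -> speech


# Stand-in engines so the sketch runs without any speech software.
def fake_asr(audio: bytes) -> str:
    return audio.decode("utf-8")


def fake_tts(text: str) -> bytes:
    return text.encode("utf-8")


print(chat_turn("What are your opening hours?"))
print(voice_turn(b"uh what are your opening hours", fake_asr, fake_tts))
```

In this toy example the hesitant spoken input still matches. In practice, that robustness of the shared core against spoken-language input is exactly what decides whether an existing chatbot can be reused for voice.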

Picture of the structure of a Chat and Voicebot.

The (scientific) focus is therefore on the step from "speech" to "understandable text". In other words, from recognising to understanding. This is a very fascinating but also difficult subject that we will discuss in more detail next time.

As far as the combination of voice and chatbots is concerned, we can state that it is generally doable, provided the complexity of the dialogue is not too great. And we see this reflected in the strong growth of applications in which both channels are developed and used.